In collaboration with the FAMU-FSU College of Engineering, the Center for Intelligent Systems, Control, and Robotics (CISCOR) conducts cutting-edge robotics research on automated systems. Under this umbrella lies the Scansorial and Terrestrial Robotics and Integrated Design Laboratory (STRIDE Lab), whose mission is to take inspiration from the natural mobility of animals to design and study legged robots.

STUDENT RESEARCHER, CISCOR - STRIDE LAB

MEET MINITAUR

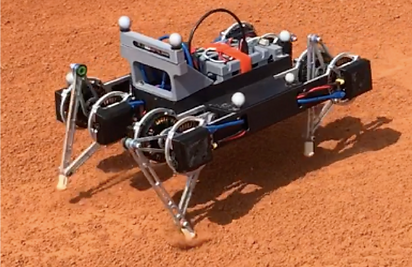

Most of my work has been on the Minitaur platform, a quadrupedal robot designed by Ghost Robotics. Each leg is a five-bar linkage (four leg links plus one ground link), a design that allows complex leg motion and fine control over the robot's stride. The overall goal of the Minitaur work has been to establish a strong, dynamic running gait that can traverse a variety of unstructured terrain. My own work has focused primarily on hardware organization, ease of operation, and pose estimation of the robot.
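To make the five-bar idea concrete, the sketch below computes where the toe ends up for a given pair of motor angles: each motor swings an upper link, and the toe sits where the two lower links meet, i.e. at the intersection of two circles. It assumes a symmetric leg (equal upper links, equal lower links) with the motor pivots spaced `d` apart; the function name and the link lengths are hypothetical placeholders, not Minitaur's real dimensions.

```python
import math


def five_bar_toe(theta1, theta2, l1=0.1, l2=0.2, d=0.0):
    """Toe position (x, y) in metres for a symmetric planar five-bar leg.

    theta1, theta2: motor angles in radians, measured from the +x axis.
    l1: upper (motor-driven) link length; l2: lower link length.
    d: ground-link length (spacing between the two motor axes);
       d = 0 models coaxial motors.
    Default lengths are placeholders, not measured Minitaur values.
    """
    # Motor pivots sit symmetrically about the hip origin.
    ax, bx = -d / 2.0, d / 2.0
    # Knee positions at the ends of the two upper links.
    k1x, k1y = ax + l1 * math.cos(theta1), l1 * math.sin(theta1)
    k2x, k2y = bx + l1 * math.cos(theta2), l1 * math.sin(theta2)
    dx, dy = k2x - k1x, k2y - k1y
    c = math.hypot(dx, dy)  # knee-to-knee distance
    if c == 0.0 or c > 2.0 * l2:
        raise ValueError("no unique toe position for these motor angles")
    # The toe lies where two circles of radius l2 (one per knee) intersect.
    mx, my = (k1x + k2x) / 2.0, (k1y + k2y) / 2.0
    h = math.sqrt(l2 ** 2 - (c / 2.0) ** 2)
    ux, uy = -dy / c, dx / c  # unit vector perpendicular to the knee-to-knee line
    cand_a = (mx + h * ux, my + h * uy)
    cand_b = (mx - h * ux, my - h * uy)
    # Keep the branch with the toe below the hip (smaller y).
    return min(cand_a, cand_b, key=lambda p: p[1])
```

For example, `five_bar_toe(-3 * math.pi / 4, -math.pi / 4)` places the toe directly below the hip, roughly 0.26 m down with these placeholder lengths. Converting the returned (x, y) to polar form gives a leg length and leg angle, which is one common way to think about commanding this kind of leg.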

HARDWARE ORGANIZATION

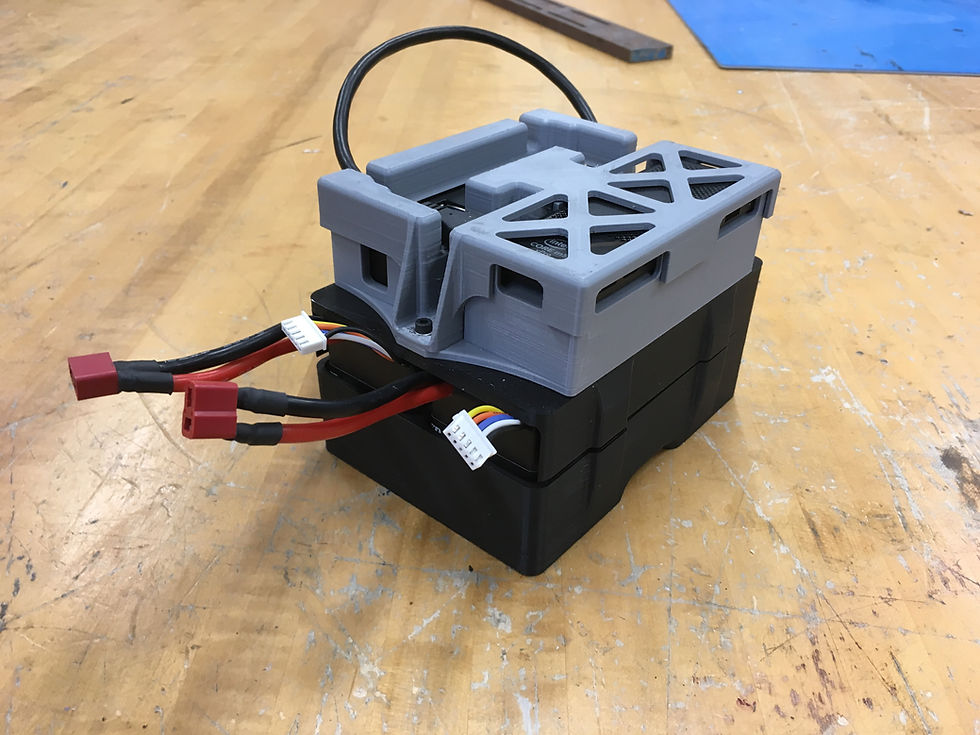

Initially, Minitaur was rigged to work, and work it did; in the process, however, it had become a mess of electronics. Maintenance was difficult because most of the electronics had to be removed to access the onboard computer (an Intel Compute Stick) or to exchange the batteries. To better organize the electronics, a 3-D model of Minitaur's body and hardware was built. Mounts and cases were then designed for the various components and assembled in the model. Once a design maximized both the security of the devices and ease of operation, the cases were 3-D printed. Thin foam was added to insulate some parts against heat damage.

POSE ESTIMATION

Determining a robot's position and orientation (its pose) is a fundamental problem in robotics. Knowing where the robot is in space tells you where it stands relative to its goal and to dangerous obstacles. Furthermore, tracking the robot's position and how that position changes over time is key to understanding its motion.

My work in position estimation has involved inertial measurement units (IMUs), visual odometry, and map generation. In particular, I took existing Simultaneous Localization and Mapping (SLAM) software and integrated it with various sensor suites, such as the Intel RealSense camera. In these setups, camera feeds, depth data, and accelerometer data are combined to give the most accurate possible estimate of a platform's position. I wrote software to extract the relevant values from the sensor feeds and use them in kinematic calculations to obtain velocity and position estimates. These methods worked well on our wheeled platforms. However, the strong vibrations and oscillations of legged running introduced too much noise to yield accurate position data, so this approach ultimately failed on the legged platform.
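The kinematic step of turning accelerometer readings into velocity and position can be sketched as double integration. The example below is a minimal one-dimensional illustration, not the original software: it assumes the samples are already expressed in the world frame with gravity removed, and the function name, sample rate, and bias value are hypothetical. It also shows why noisy inertial data is so hard to use on its own: a small constant error in acceleration grows quadratically in the position estimate.

```python
def dead_reckon(accels, dt, v0=0.0, p0=0.0):
    """Integrate 1-D acceleration samples (m/s^2) twice with the trapezoidal rule.

    Assumes samples are world-frame with gravity already removed.
    Returns lists of velocity (m/s) and position (m) estimates, one per sample.
    """
    velocities, positions = [v0], [p0]
    v, p = v0, p0
    for a_prev, a_next in zip(accels, accels[1:]):
        v_next = v + 0.5 * (a_prev + a_next) * dt  # velocity: integrate acceleration
        p += 0.5 * (v + v_next) * dt               # position: integrate velocity
        v = v_next
        velocities.append(v)
        positions.append(p)
    return velocities, positions


if __name__ == "__main__":
    dt = 0.01  # assumed 100 Hz sample rate
    # A robot standing still whose accelerometer reads a small constant bias.
    bias = 0.05  # m/s^2, hypothetical
    _, pos = dead_reckon([bias] * 1001, dt)  # 10 s of samples
    print(f"position error after 10 s: {pos[-1]:.2f} m")
```

With these assumed numbers the stationary robot appears to have moved about 2.5 m after only 10 s (error = ½·bias·t²). Zero-mean vibration noise does not cancel perfectly either, so its integrated error also accumulates, which is consistent with the drift seen during legged running.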

My current position estimation work instead revolves around outdoor experiments using RTK (real-time kinematic) GPS.
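GPS receivers report latitude and longitude, so a typical first step in using them for position estimation is converting each fix into local east/north distances in metres from a starting point. The sketch below does this with an equirectangular (flat-Earth) approximation and then differences consecutive fixes to estimate velocity. The function names, the spherical Earth radius, and the example coordinates are assumptions for illustration, not a description of the lab's actual pipeline.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical-Earth assumption


def to_local_en(lat_deg, lon_deg, ref_lat_deg, ref_lon_deg):
    """East/north offset (m) of a GPS fix from a reference fix.

    Equirectangular (flat-Earth) approximation: adequate over a small
    test area, not over long distances.
    """
    d_lat = math.radians(lat_deg - ref_lat_deg)
    d_lon = math.radians(lon_deg - ref_lon_deg)
    # A degree of longitude shrinks by cos(latitude) away from the equator.
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(ref_lat_deg))
    north = EARTH_RADIUS_M * d_lat
    return east, north


def track_velocity(fixes, dt):
    """Finite-difference (east, north) velocities in m/s from (lat, lon) fixes taken every dt seconds."""
    ref_lat, ref_lon = fixes[0]
    pts = [to_local_en(lat, lon, ref_lat, ref_lon) for lat, lon in fixes]
    return [((e1 - e0) / dt, (n1 - n0) / dt)
            for (e0, n0), (e1, n1) in zip(pts, pts[1:])]
```

For instance, two fixes 0.00001° of latitude apart taken 0.1 s apart correspond to roughly 11 m/s northward. Because RTK fixes can be accurate to the centimetre level, a full WGS-84 geodetic-to-ENU conversion would be the safer choice when the test area is large.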